The Sounds~Write linguistic phonic teaching programme was conceived and written in 2002/3. An essential component of the authors’ thinking about literacy tuition is that all teachers of literacy deserve high-quality training. Such training is needed to help dispel the many myths and inaccuracies that pervade teaching practice and stem from a variety of sources, including personal experiences of schooling in childhood, BEd and Teaching Certificate courses, local authority advisers, Department of Education publications up to and including Letters & Sounds, and Ofsted inspectors. We have been providing training of precisely this quality since 2003, during which time we have run more than 300 courses, attended by over 6000 teachers, teaching assistants and other education professionals.

Equally central to our approach is the belief that all education professionals need accurate feedback about the effectiveness of teaching ideas and programmes. We therefore decided from the outset to encourage schools to collect data on the performance of their Sounds~Write-taught pupils. Fortunately, most teachers share our concern about the lack of good evidence to underpin their understanding of what actually works in the classroom. Consequently, many schools, employing between them hundreds of classroom teachers trained in Sounds~Write, have been willing to test their pupils and send us the results so that we could evaluate the pupils’ progress.

When we began collecting data, our goal was to gather information on a pupil sample equivalent in size to 50 full classes of 30 pupils (1500 pupils) passing through Key Stage One. The data collected in June 2009 completed this project, enabling us to report on the progress of 1607 individual pupils for whom we have spelling test results obtained in May or June at the end of each of their Reception (YR), Year 1 (Y1) and Year 2 (Y2) school years.

We hope that you will find the results, and our discussion of them, both interesting and illuminating. It would be valuable to compare these results with similar information about other literacy tuition programmes in use in UK schools, but we are not aware that any such data exist. This, sadly, reflects the fact that most literacy tuition practices in the English-speaking world are based on belief rather than on real evidence of their effectiveness.

Overview of main results

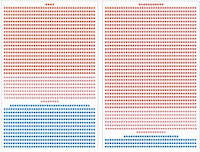

This study has produced an enormous amount of data. To avoid drowning the reader in figures, we first present the overall results as a simple picture in which each pupil in the study is represented by a single dot. Each red dot represents a pupil scoring at or above their own chronological age level, each pink dot a pupil scoring below their chronological age level but by no more than 6 months, and each dark blue dot a pupil scoring more than 6 months below their chronological age level.
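The colour coding amounts to a simple three-way rule on the gap between a pupil's spelling age and chronological age. The Python sketch below expresses that rule, assuming both ages are measured in whole months; the function name `dot_colour` and the example ages are hypothetical illustrations, not part of the study.

```python
def dot_colour(spelling_age_months: int, chronological_age_months: int) -> str:
    """Classify one pupil using the colour scheme of the dot picture (hypothetical helper)."""
    # Negative gap means the pupil is spelling below their chronological age level.
    gap = spelling_age_months - chronological_age_months
    if gap >= 0:
        return "red"        # at or above chronological age level
    if gap >= -6:
        return "pink"       # below, but by no more than 6 months
    return "dark blue"      # more than 6 months below


# Illustrative ages only (not data from the study): a pupil aged 87 months (7 years 3 months).
for spelling_age in (90, 87, 84, 81, 80):
    print(spelling_age, dot_colour(spelling_age, 87))
```

With these inputs, spelling ages of 90 and 87 months give red, 84 and 81 give pink, and 80 gives dark blue, so a pupil exactly 6 months below their age level still counts as pink.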

The box on the left shows the outcome expected for 1607 pupils at the end of Year Two, based on the norms of Young’s Parallel Spelling Test*, which was used to evaluate their progress. The box on the right shows the actual results of the 1607 pupils in the study, all taught by staff trained in Sounds~Write.

By the end of Key Stage One, 413 pupils (25.7%) who, on the test norms, would have been expected to score below their chronological age had in fact scored above it.

Including the 252 pupils who scored below, but within 6 months of, their chronological age level, a total of 1463 of the 1607 pupils (91% of the children in the study) will move up to Key Stage Two with basic literacy skills at or above an age-appropriate level.
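The headline percentages can be cross-checked with a few lines of arithmetic. The Python sketch below uses only the counts given in the text; the number of pupils at or above their age level (1211) and the number more than 6 months below (144) are derived here by subtraction, on the assumption that the 1463 total is exactly the red plus pink dots, rather than being reported directly.

```python
TOTAL_PUPILS = 1607              # pupils tracked through Key Stage One
AT_OR_WITHIN_6 = 1463            # at/above age level, or below by no more than 6 months
WITHIN_6_MONTHS_BELOW = 252      # below age level, but by no more than 6 months
EXPECTED_BELOW_SCORED_ABOVE = 413  # expected below age level on the norms, actually above


def pct(part: int, whole: int) -> float:
    """Percentage of `whole`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Derived counts (assumption: 1463 = red dots + pink dots).
at_or_above = AT_OR_WITHIN_6 - WITHIN_6_MONTHS_BELOW   # red dots
more_than_6_below = TOTAL_PUPILS - AT_OR_WITHIN_6      # dark blue dots

print(f"Expected below, scored above: {EXPECTED_BELOW_SCORED_ABOVE} "
      f"({pct(EXPECTED_BELOW_SCORED_ABOVE, TOTAL_PUPILS)}%)")
print(f"At or above, or within 6 months below: {AT_OR_WITHIN_6} "
      f"({pct(AT_OR_WITHIN_6, TOTAL_PUPILS)}%)")
print(f"  of which at or above age level (derived): {at_or_above}")
print(f"More than 6 months below (derived): {more_than_6_below} "
      f"({pct(more_than_6_below, TOTAL_PUPILS)}%)")
```

Run as written, this reports 25.7% and 91.0%, matching the text, and places 144 pupils (about 9%) more than 6 months below their chronological age level.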

What has happened is an improvement in the literacy skills of the whole cohort: all pupils show improvement when compared with the progress made in the past by the pupils on whom the test was originally standardised. Much research in this area is short-term, looking for the effects of interventions lasting less than 6 months, and these effects often seem to disappear mysteriously over the following 12 months. Results are also frequently presented as average scores, which can mask the fact that the claimed positive effects were achieved solely by pupils at the upper end of the literacy ability range, leaving those at the lower end no better off and even further behind those who are doing well. Sounds~Write was written with the main goal of improving and accelerating the development of literacy skills for ALL pupils, and tracking this large sample of children individually through Key Stage One shows that this goal has largely been achieved.

Gender differences in the speed of literacy skills acquisition are a continuing problem for the National Literacy Strategy because of the relatively poor performance of boys. With the linguistic phonic teaching used in this study, boys have not lagged behind in this way. For those pupils developmentally ready to engage with formal literacy tuition at the time of school entry, the performance of boys and girls is very similar, with girls ahead by only about 1.5 months at the end of Year Two (a difference of no pedagogical consequence).

The data do show, however, that about 18.6% of pupils were not really ready to engage intellectually with formal tuition when they started their Reception Year. More of these pupils were boys than girls, as basic knowledge of gender differences in early development, particularly in speech and language, would predict. Almost twice as many boys as girls were not ready to benefit fully from formal literacy tuition in Reception: 195 boys against 104 girls, a boys-to-girls ratio of close to 1.9:1.
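The proportions quoted for pupils not ready for formal tuition follow directly from the two reported counts. The Python sketch below reproduces them, assuming (as the figures imply) that the 18.6% is the 195 boys plus 104 girls expressed as a share of all 1607 pupils in the study.

```python
BOYS_NOT_READY = 195
GIRLS_NOT_READY = 104
TOTAL_PUPILS = 1607

not_ready = BOYS_NOT_READY + GIRLS_NOT_READY        # pupils not ready at school entry
share_not_ready = 100 * not_ready / TOTAL_PUPILS    # percentage of the whole cohort
boy_girl_ratio = BOYS_NOT_READY / GIRLS_NOT_READY   # boys per girl among not-ready pupils

print(f"Not ready at school entry: {not_ready} pupils ({share_not_ready:.1f}%)")
print(f"Boys to girls: {boy_girl_ratio:.1f} : 1")
```

The unrounded ratio is 1.875, which is why the text describes it as "almost twice" and "close to 1.9:1".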

These results will soon be available in full detail on the Sounds~Write website. Watch this space.